When I was in graduate school at Northwestern in the early 90s the hot financial topics were all related to finding and estimating risk factors: Arbitrage Pricing Theory via latent factors (Connor and Korajczyk 1986), Kalman filter state-space models (eg, Stock and Watson 1989), and method of moments estimators (Lars Hansen 1982). These appealed to central limit theorem proofs, which is the academic dream, as math proofs decisively prove objective meta-knowledge, how to know important things. Learning such methods involved intelligence and hard work, promising an instant barrier to entry, especially against those boorish B-school students who had much better parties but didn't know statistics, let alone measure theory.

Around that same time, Eugene Fama and Ken French were looking at the returns of low and high dividend stocks by simply sorting them and looking at the basic statistics of these portfolios (see here). While Fama was well-respected for his theoretical work on defining efficient markets and his seminal 1973 paper confirming the capital asset pricing model (ie, the CAPM), he seemed like one of those antediluvian economists who typified the 1970s. Indeed, if you read those old papers, you will be amazed by how simple they were--you can understand them on one read--making the many inevitable false-positives glaringly obvious (with hindsight).

But then in 1992 Fama and French published their blockbuster 3-factor model documenting that if you sort stocks first by size and then by beta there is no correlation between beta and returns. The previous finding was the product of an omitted variable, size. That is, one might look at humans and find hair length inversely correlated with height: longer hair correlates with shorter humans. But if you note women have longer hair than men and control for that, the correlation goes away; hair has nothing to do with height.
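The hair-and-height point is easy to check with a toy simulation. The sketch below is only an illustration: the group means and standard deviations are made-up numbers, and `simulate_people` and `correlation` are hypothetical helpers, not anything from the Fama-French papers. Within each sex, hair length and height are drawn independently, so any pooled correlation comes entirely from the omitted variable.

```python
import random
import statistics


def correlation(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_people(n=20000, seed=0):
    """Return (is_woman, hair_cm, height_cm) tuples.

    Assumed, illustrative parameters: hair and height are independent
    within each sex; only the group means differ.
    """
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        is_woman = rng.random() < 0.5
        height = rng.gauss(163.0 if is_woman else 177.0, 7.0)
        hair = max(0.0, rng.gauss(35.0 if is_woman else 10.0, 8.0))
        people.append((is_woman, hair, height))
    return people


people = simulate_people()

# Pooled sample: ignores the omitted variable (sex).
pooled_corr = correlation([p[1] for p in people], [p[2] for p in people])

# Controlling for the omitted variable: correlate within each group.
women = [p for p in people if p[0]]
men = [p for p in people if not p[0]]
corr_women = correlation([p[1] for p in women], [p[2] for p in women])
corr_men = correlation([p[1] for p in men], [p[2] for p in men])

print(f"pooled: {pooled_corr:.2f}")
print(f"women only: {corr_women:.2f}, men only: {corr_men:.2f}")
```

The pooled correlation comes out strongly negative while the within-group correlations hover around zero, which is the same pattern as beta looking priced until you sort on size first.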

Size and value were identified in the late 70s, often in accounting journals. Fama and French's analysis was simple but the results were too strong to dismiss, especially as Fama was not some accounting noob, but rather, someone who understood risk and expected returns. Fama and French hypothesized that the size and value premiums meant these characteristics proxied risks that affect investors but academics can't measure, just as we can see the effects of dark matter but not the dark matter itself.

Still, many researchers were burned by false findings, and value and size were discovered in the same naive way of simply finding a strong pattern and presuming it's real as opposed to an order statistic (see here DeBondt and Thaler's list of anomalies circa 1989). While F-F said they were risk proxies, it seemed ad hoc. Economic theory implies risk premiums in that the sine qua non of economics, the utility function, implies a risk premium in an if and only if relation (which implies B ⇒ A and A ⇒ B). [my take: the error here is applying it to an individual's wealth, it works great for ice cream and shoes.] Add to this 20 years where economists learned that the CAPM model had been proven ('a peer-reviewed fact,' they would say today), and you have a strong bias that is not going to be discarded after some simple analysis. You can identify such researchers in the past--many still plying their trade--by how they would introduce their findings via moment conditions (see Harvey and Siddique, 1999), or factor models that identify loadings, factor returns, and the price of risk, λ (see Ang et al 2006).

Yet size and value investing became popular. Dimensional Fund Advisors created a highly successful and influential quant shop, and size and value became ubiquitous as either fund signatures or risk factors, depending on the application. There were dark times, such as when the size factor faltered in the late 80s and mid-90s, or when value stocks got clobbered in the tech bubble, but they always came back: they were risky! The rigorous methodology of latent factor models, Kalman filters, and GMM, meanwhile, produced nothing practical in finance.

Simple models win in the long run, but the short run is always dominated by the obfuscators. Socrates and Jesus criticized the sophists and Pharisees, respectively, because these official intellectuals were sanctimonious hair-splitters, and missed the forest for the trees. Christian apologists were the foremost medieval scholars, and though now apologist is a derogatory term for a dogmatic thinker, the key is that sophisticated reasoning has always been used to mask dogmatic thinking. Sophisticates use their superior knowledge to out-argue, not find the truth. Complex arguments that are long and hard to follow, and have the support of most other intellectuals, are impossible to rebut. No one is going to spend a lot of time understanding such arguments if they think it's all a waste of time, so everyone who could argue with them is a fellow-believer (eg, I originally wanted to be a macroeconomist, but quickly discerned it was pointless and so today I cannot give a thorough critique of dominant macro models).

Simple models are better at finding the truth because they are easier for others to replicate and test, and this is the best evidence of something that is true and important, because things that work tend to be copied over time. They also expose dopey reasoning and overfitting, because readers can understand them. Complexity does not overcome overfitting, it just hides it better. GMM estimators are rarely criticized for overfitting because it's too hard to tell, and when no one is getting caught for the world's oldest statistical sin you can be sure it is rampant (eg, when the UFC had a steroid problem no one was getting caught).

More importantly, there just are not that many really profound financial ideas one would call complex. Off the top of my head, I think about how volatility is proportional to the square root of time, Black-Scholes, or the convexity adjustment in forwards vs. futures for interest-rates. Yet, these can all be communicated in one sitting to a person who understands basic statistics. That's not true for the fancy methods above.
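To show how little machinery the square-root-of-time rule needs, here is a minimal sketch. It assumes daily returns are independent draws with the same volatility (exactly the assumption that makes the rule hold), and `horizon_vol` is a hypothetical helper name. Variances of independent returns add, so the variance of a T-day return is T times the daily variance and the volatility is sqrt(T) times the daily volatility.

```python
import random
import statistics


def horizon_vol(daily_vol, horizon, n_paths=20000, seed=1):
    """Simulated volatility of the sum of `horizon` iid daily returns."""
    rng = random.Random(seed)
    totals = [
        sum(rng.gauss(0.0, daily_vol) for _ in range(horizon))
        for _ in range(n_paths)
    ]
    return statistics.pstdev(totals)


daily_vol = 0.01  # assumed 1% daily volatility, for illustration only
for horizon in (1, 4, 16):
    simulated = horizon_vol(daily_vol, horizon)
    predicted = daily_vol * horizon ** 0.5
    print(f"{horizon:>2} days: simulated {simulated:.4f}, sqrt-rule {predicted:.4f}")
```

With autocorrelated or fat-tailed returns the rule is only an approximation, but the whole idea still fits in a dozen lines.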

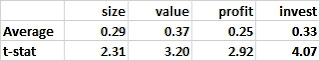

While the Fama-French approach thrived due to its simplicity, we are now in another dark time. Specifically, the 5-factor model introduced by Fama-French in 2015 has reported the following monthly return averages for their factors (excluding the uncontroversial market risk premium):

Since publication, however, the results look like this (note: they should be positive):

Unlike prior dark times, we now also have a boatload of factors in addition to Fama and French's 5. Overfitting is a perennial problem. Unlike 'big data' used by Amazon and Netflix, factor data is orders of magnitude smaller and grows very slowly, all the while many thousands of full-time researchers are looking at the same data for new patterns. They are all highly motivated to find new, better factors, and we all know what happens when you torture the data long enough. The simplicity of the 3-factor Fama-French model was not an equilibrium, as this invited many to ignore some factors and create their own (even Fama and French joined in, adding 2 more). There is now just as much tendentious and disingenuous reasoning among the factor promoters as there was with those old opaque and complex methods; we have just gotten used to it.
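What torturing the data looks like can be sketched in a few lines. The simulation below is a toy under stated assumptions: every candidate factor is pure noise with a true mean return of zero, and the counts (500 candidates, 240 months, 3% monthly volatility) and the helper names `t_stat` and `mine_factors` are made up for illustration. The best of many tries is an order statistic, so it looks impressive even though nothing is there.

```python
import random
import statistics


def t_stat(returns):
    """t-statistic of the mean return against zero."""
    mean = statistics.fmean(returns)
    std_err = statistics.stdev(returns) / len(returns) ** 0.5
    return mean / std_err


def mine_factors(n_factors=500, n_months=240, monthly_vol=0.03, seed=2):
    """t-stats for `n_factors` candidate factors that are pure noise."""
    rng = random.Random(seed)
    return [
        t_stat([rng.gauss(0.0, monthly_vol) for _ in range(n_months)])
        for _ in range(n_factors)
    ]


t_stats = mine_factors()
best_abs_t = max(abs(t) for t in t_stats)
n_significant = sum(abs(t) > 1.96 for t in t_stats)

print(f"largest |t| among {len(t_stats)} noise factors: {best_abs_t:.2f}")
print(f"'significant' at the 5% level: {n_significant}")
```

Roughly 5% of the worthless candidates clear the usual significance bar, and the single best one clears it comfortably. A researcher who reports only the winner has a publishable factor.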

This especially goes for equity factors applied at hedge funds, which number at least 20 and include orthogonally estimated style factors but also industry factors that no one thinks have a return premium. Argument by authority is ubiquitous among authorities of all stripes; among scientists, however, this tactic is masked by having multiple subtle assumptions justified by references to various well-cited peer-reviewed articles, each of which itself does the same thing, making it impossible to refute because no one has that much time. I have never seen a good argument for how these factors help manage risk, but I understand how they are very useful in explaining why returns were down last month. Pretentious and parochial jargon makes it easier for the common (ie, dumb) risk manager, senior executive, fund advisor, etc., to feel valuable: they know something outsiders do not understand, and those outsiders can't prove it's not blather.

No financial researcher agrees on the canonical set of factors or how best to proxy them. While no one is suggesting Kalman filters, everyone has some subtle twist for their value factor, how to time factors, or their own creation. Failure now has led many to reexamine their factors, worried that they either overfit their models in the past or would be doing so now by changing them. The risk of jumping off a factor just before it rebounds is symmetric to the risk of holding on to one that will join price, accruals, and inflation betas in the history dustbin.
